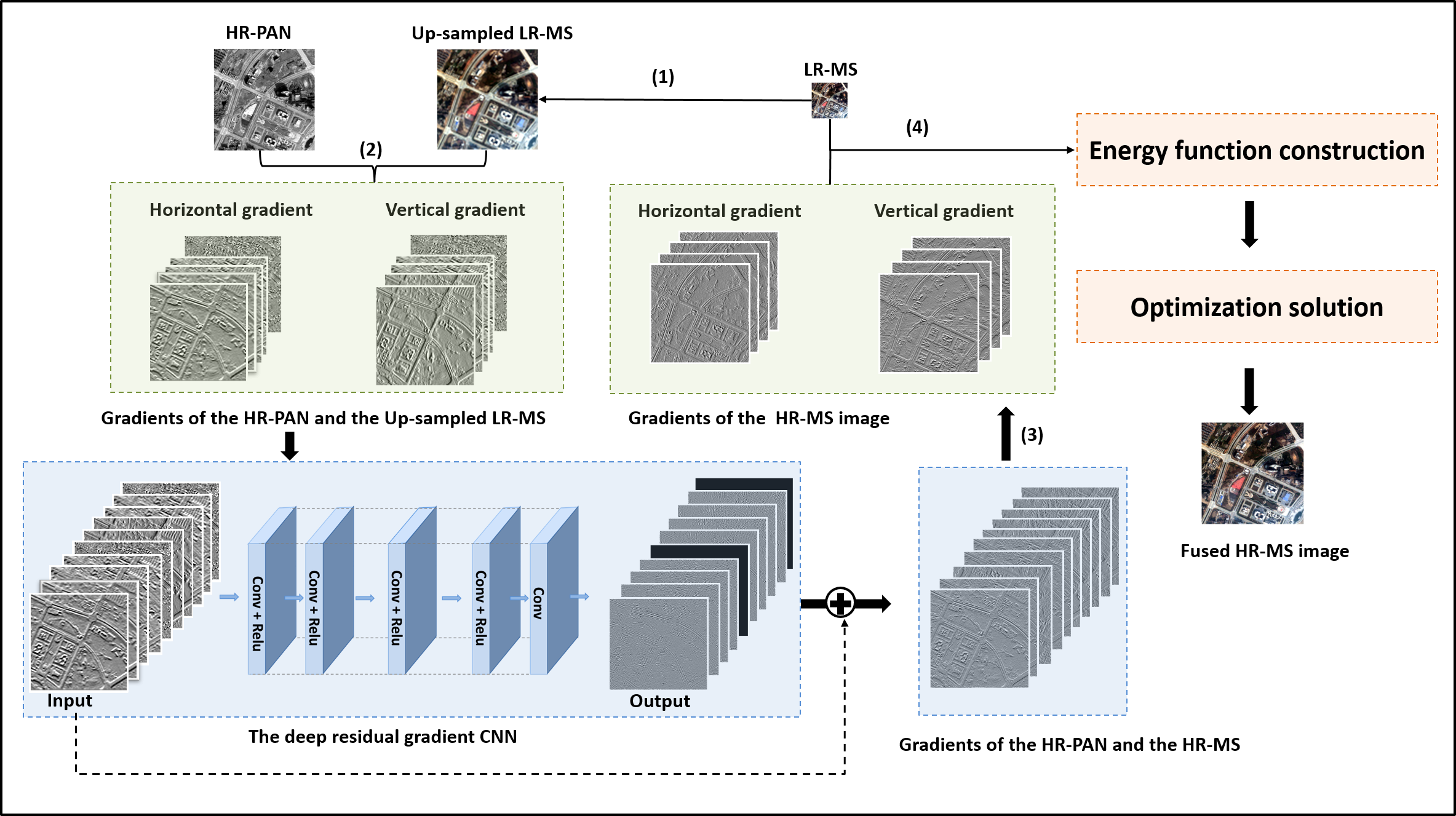

In spatial–spectral fusion, variational model-based methods and deep learning (DL)-based methods are the state-of-the-art approaches. This paper presents a fusion method that combines a deep neural network with a variational model for the most common case of spatial–spectral fusion: panchromatic (PAN)/multispectral (MS) fusion. Specifically, a deep residual convolutional neural network (CNN) is first trained to learn the gradient features of the high spatial resolution multispectral (HR-MS) image. Image observation variational models are then formulated to describe the relationships among the ideal fused image, the observed low spatial resolution multispectral (LR-MS) image, and the previously learned gradient priors. The fusion result is then obtained by solving the fusion variational model. Both quantitative and visual assessments on high-quality images from various sources demonstrate that the proposed fusion method outperforms all the mainstream algorithms included in the comparison in terms of overall fusion accuracy. Figure 1 shows the framework of the proposed fusion method.

Figure 1. Flowchart of the proposed method.
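The variational step summarized above (recovering the fused image from the observed LR-MS image and a learned gradient prior) can be illustrated with a small numerical sketch. This is not the paper's model: the summary does not give the actual energy functional, operators, or solver, so everything here is an assumption chosen for illustration. The sketch uses a simple quadratic energy for a single band, block averaging as the degradation operator, periodic forward differences as the gradient, and plain gradient descent. The true gradients of a synthetic image stand in for the CNN output, and the PAN image is not modeled. All function names (`downsample`, `grad`, `fuse_band`, and so on) are hypothetical.

```python
import numpy as np

def downsample(x, s):
    """Block-average an (H, W) band by factor s (assumed degradation operator D)."""
    h, w = x.shape
    return x.reshape(h // s, s, w // s, s).mean(axis=(1, 3))

def downsample_adjoint(r, s):
    """Adjoint D^T of block averaging: spread each value over its s x s block."""
    return np.kron(r, np.ones((s, s))) / (s * s)

def grad(x):
    """Horizontal and vertical forward differences with periodic boundaries."""
    g_h = np.roll(x, -1, axis=1) - x
    g_v = np.roll(x, -1, axis=0) - x
    return g_h, g_v

def grad_adjoint(g_h, g_v):
    """Adjoint of grad() under the same periodic boundary assumption."""
    return (np.roll(g_h, 1, axis=1) - g_h) + (np.roll(g_v, 1, axis=0) - g_v)

def energy(x, y, g_h, g_v, s, lam):
    """Assumed energy: 0.5*||Dx - y||^2 + 0.5*lam*||grad(x) - g||^2."""
    d_h, d_v = grad(x)
    fidelity = 0.5 * np.sum((downsample(x, s) - y) ** 2)
    prior = 0.5 * lam * (np.sum((d_h - g_h) ** 2) + np.sum((d_v - g_v) ** 2))
    return fidelity + prior

def fuse_band(y, g_h, g_v, s, lam=1.0, n_iter=1000):
    """Minimize the assumed energy by gradient descent, starting from upsampled y."""
    x = np.kron(y, np.ones((s, s)))  # nearest-neighbour upsampling as the start
    # Step = 1 / (upper bound on the Lipschitz constant of the energy gradient):
    # ||D^T D|| = 1/s^2 and ||grad^T grad|| <= 8 for 2-D forward differences.
    step = 1.0 / (1.0 / s**2 + 8.0 * lam)
    for _ in range(n_iter):
        d_h, d_v = grad(x)
        g = downsample_adjoint(downsample(x, s) - y, s)
        g = g + lam * grad_adjoint(d_h - g_h, d_v - g_v)
        x = x - step * g
    return x

# Synthetic check: the "learned" gradient prior is taken from a known image,
# so the minimizer of the assumed energy is that image itself.
rng = np.random.default_rng(0)
x_true = rng.random((16, 16))
s = 2
y = downsample(x_true, s)          # simulated LR observation
g_h, g_v = grad(x_true)            # stand-in for CNN-predicted gradients
x0 = np.kron(y, np.ones((s, s)))   # naive upsampling baseline
x_hat = fuse_band(y, g_h, g_v, s)
print("baseline max error:", np.abs(x0 - x_true).max())
print("fused max error:   ", np.abs(x_hat - x_true).max())
```

In this toy setting the gradient prior supplies the high-frequency detail that the low-resolution observation lacks, while the fidelity term fixes the overall intensity level. With imperfect (predicted) gradients, the weight `lam` would trade off the two terms.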

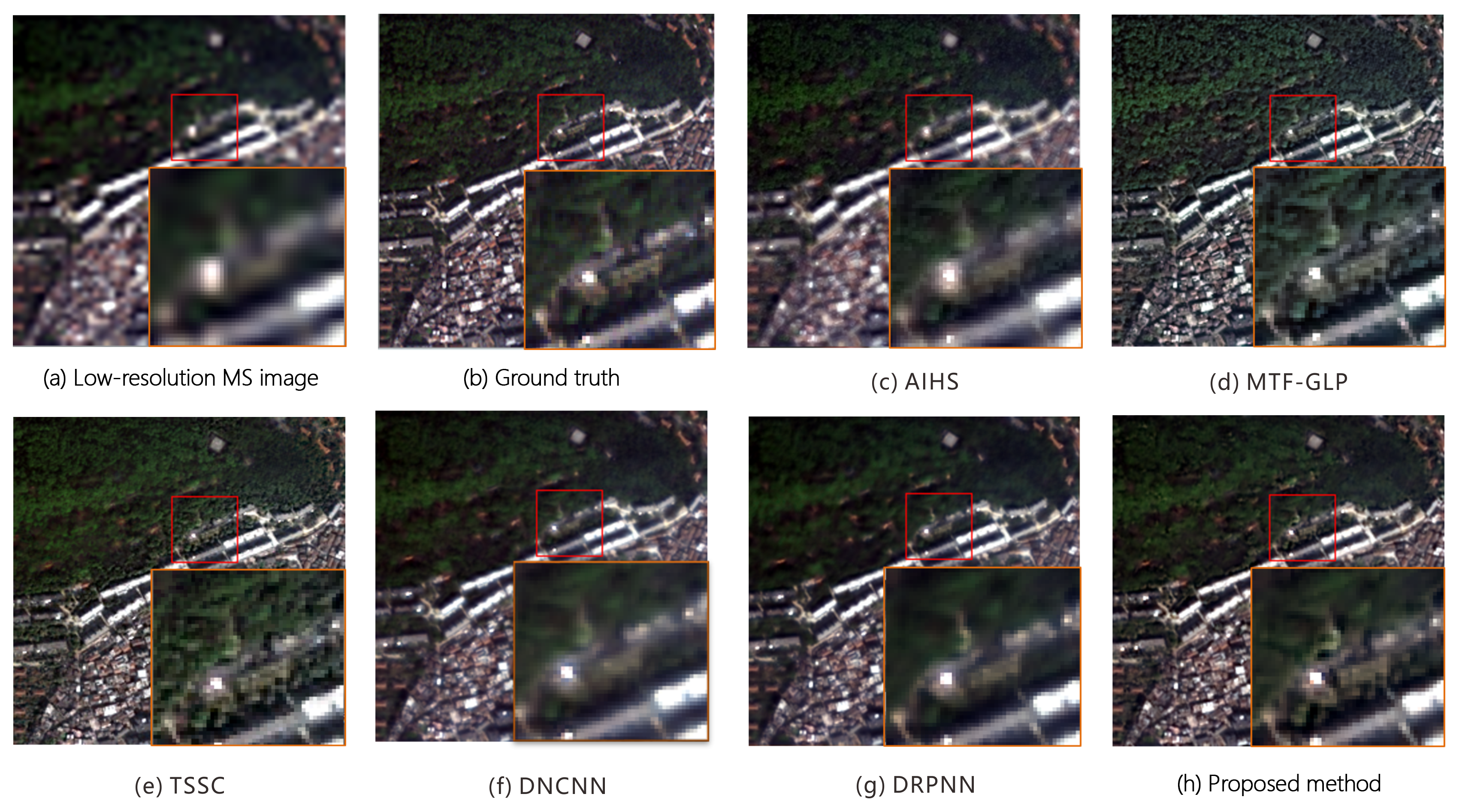

Figure 2. Simulated fusion results for the QuickBird image. (a) Low-resolution MS image simulated by downsampling. (b) Ground truth. (c) AIHS. (d) MTF-GLP-HPM. (e) TSSC. (f) DNCNN. (g) DRPNN. (h) Proposed method.

For details, see: H. Shen, M. Jiang, J. Li, Q. Yuan, Y. Wei, and L. Zhang, "Spatial-Spectral Fusion by Combining Deep Learning and Variational Model," IEEE Transactions on Geoscience and Remote Sensing, 2019, 57(8): 6169–6181.